SDTM (Study Data Tabulation Model) programming is an important part of clinical research because it organizes and structures clinical trial data. In clinical data management, ensuring the accuracy and consistency of data is essential. But how does SDTM programming work? What are the most important SDTM variables?

This blog explores the SDTM mapping process, explains key SDTM variables, and outlines the steps in SDTM programming. Read on…

How Does SDTM Programming Work?

SDTM programming is the backbone of data organization in clinical trials. It transforms raw clinical data into a standardized format, allowing for easier analysis and submission to regulatory authorities like the FDA.

SDTM is structured so that data from different sources and studies can be interpreted and compared consistently. This consistency is essential for maintaining the integrity and reliability of clinical trial outcomes.

What Is The SDTM Mapping Process?

The SDTM mapping process converts raw clinical trial data into the SDTM format. It involves mapping raw data variables into standardized SDTM variables and making sure the data adheres to predefined structures and formats.

The SDTM mapping process is an integral part of clinical data management. The Study Data Tabulation Model it targets is defined by the Clinical Data Interchange Standards Consortium (CDISC).

The purpose of SDTM is to make sure that data submitted to regulatory authorities, like the FDA, is in a consistent, easily reviewable format. Here’s a breakdown of the SDTM mapping process:

Step 1. Analysing the Raw Data

- Clinical trial data is collected from multiple sources like case report forms (CRFs), labs, medical devices, and patient-reported outcomes.

- The first step is to get a clear understanding of this raw data, including its structure, variables, and meaning.
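A simple way to start this analysis is to profile each raw field. The sketch below assumes the raw demographics export has already been read into a list of Python dicts; the field names (SITE, PATNO, BIRTHDT, GENDER, AGE_YRS) are hypothetical and not taken from any real EDC system. It counts missing values and tallies the distinct values in each field.

```python
from collections import Counter

# Hypothetical raw demographics records, as exported from an EDC system.
raw_dm = [
    {"SITE": "101", "PATNO": "001", "BIRTHDT": "1980-04-12", "GENDER": "Male", "AGE_YRS": "44"},
    {"SITE": "101", "PATNO": "002", "BIRTHDT": "", "GENDER": "female", "AGE_YRS": "37"},
    {"SITE": "102", "PATNO": "001", "BIRTHDT": "1975-11-30", "GENDER": "F", "AGE_YRS": ""},
]


def profile(records):
    """For each raw field, count missing values and tally the distinct non-missing values."""
    fields = sorted({key for rec in records for key in rec})
    summary = {}
    for field in fields:
        values = [str(rec.get(field) or "").strip() for rec in records]
        summary[field] = {
            "missing": sum(1 for v in values if v == ""),
            "distinct": dict(Counter(v for v in values if v != "")),
        }
    return summary


for field, stats in profile(raw_dm).items():
    print(field, stats)
```

In this made-up data the profile would reveal three spellings of sex ("Male", "female", "F"), a missing birth date and age, and a patient number ("001") that repeats across sites, so it cannot identify a subject on its own. Each of these findings feeds a later step.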

Step 2. Define the SDTM Domains

SDTM defines several domains or categories for organizing clinical data, such as the following:

- Demographics (DM): Basic participant information.

- Adverse Events (AE): Any adverse effects experienced by participants.

- Laboratory Data (LB): Lab test results.

- Medical History (MH): Participant’s medical history.

Choose the domains relevant to your study based on its design and the data collected.

Step 3. Mapping Raw Data to SDTM Domains

- Map variables from the raw data to the corresponding variables in the SDTM domains.

- For example, if your raw data has a field for age, you would map it to the SDTM AGE variable within the Demographics (DM) domain.

- Special care is needed to ensure that data fits the SDTM naming conventions and follows its rules for formatting and terminology.
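As a minimal sketch of this step for the DM domain, the function below maps one raw record to SDTM variable names. The raw field names, the study identifier "ABC-123", and the way SUBJID and USUBJID are built are all assumptions for illustration. Real mappings come from a study-specific mapping specification, and AGE is often derived from the birth date and reference start date rather than copied.

```python
# Hypothetical study identifier; a real program takes STUDYID from the protocol.
STUDYID = "ABC-123"


def map_dm(raw):
    """Map one raw demographics record (hypothetical field names) to SDTM DM variables."""
    siteid = raw["SITE"].strip()
    # Patient numbers restart at each site here, so SUBJID combines site and patient number.
    subjid = f"{siteid}-{raw['PATNO'].strip()}"
    age = raw.get("AGE_YRS", "").strip()
    return {
        "STUDYID": STUDYID,
        "DOMAIN": "DM",
        # STUDYID-SUBJID is a common USUBJID convention, not an SDTM requirement.
        "USUBJID": f"{STUDYID}-{subjid}",
        "SUBJID": subjid,
        "SITEID": siteid,
        "AGE": int(age) if age else None,  # numeric age; None when not collected
        "AGEU": "YEARS" if age else None,  # unit only when AGE is populated
        "SEX": raw.get("GENDER"),  # still raw text; recoded to controlled terms in Step 4
    }


print(map_dm({"SITE": "101", "PATNO": "001", "GENDER": "Male", "AGE_YRS": "44"}))
```

Keeping SEX as raw text at this point separates renaming and restructuring from terminology recoding, which makes each step easier to review.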

Step 4. Handling Controlled Terminology

- SDTM uses controlled terminology (standardized terms) for specific variables, such as units of measure or disease terms.

- Map raw values to the corresponding controlled terms so the data conforms to this terminology (for example, a collected sex value of "Male" becomes "M").
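A minimal sketch of recoding collected values to controlled terms, assuming a hand-written lookup for the SEX variable. The target values M, F, and U are terms from the CDISC SEX codelist, but the raw spellings used as keys are hypothetical. Anything not found in the lookup is flagged for review instead of being guessed.

```python
# Lookup from upper-cased raw spellings (hypothetical) to CDISC SEX codelist terms.
SEX_CT = {
    "M": "M", "MALE": "M",
    "F": "F", "FEMALE": "F",
    "U": "U", "UNKNOWN": "U",
}


def recode(values, lookup):
    """Map raw values to controlled terms; collect unmapped values for review."""
    mapped, unmapped = [], []
    for raw in values:
        key = (raw or "").strip().upper()
        if key == "":
            mapped.append(None)  # missing stays missing
        elif key in lookup:
            mapped.append(lookup[key])
        else:
            mapped.append(None)  # do not guess; flag it instead
            unmapped.append(raw)
    return mapped, unmapped


mapped, unmapped = recode(["Male", "female", " F ", "", "Other"], SEX_CT)
print(mapped)    # ['M', 'F', 'F', None, None]
print(unmapped)  # ['Other']
```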

Step 5. Validation and QC

- Once the raw data is mapped to SDTM, it’s critical to validate the dataset against SDTM compliance standards using automated validation tools such as Pinnacle 21 (formerly OpenCDISC).

- The goal is to identify and correct errors such as missing data, incorrect formats, or inconsistencies.
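Validation tools like Pinnacle 21 apply large, versioned rule sets. As a much smaller illustration of the idea, the sketch below runs a few hand-written checks on DM records. The checks and the required-variable list are an illustrative subset chosen for this example; they are not Pinnacle 21 or FDA rule definitions.

```python
# Illustrative subset of DM variables treated as required in this sketch.
REQUIRED_DM = ["STUDYID", "DOMAIN", "USUBJID", "SUBJID", "SITEID", "SEX"]
# Terms from the CDISC SEX codelist.
SEX_TERMS = {"M", "F", "U", "UNDIFFERENTIATED"}


def check_dm(rows):
    """Return human-readable issues for a list of DM records (dicts)."""
    issues = []
    seen = set()
    for i, row in enumerate(rows, start=1):
        for var in REQUIRED_DM:
            if row.get(var) in (None, ""):
                issues.append(f"row {i}: {var} is missing")
        usubjid = row.get("USUBJID")
        if usubjid:
            if usubjid in seen:  # DM should hold one record per subject
                issues.append(f"row {i}: duplicate USUBJID {usubjid}")
            seen.add(usubjid)
        sex = row.get("SEX")
        if sex not in (None, "") and sex not in SEX_TERMS:
            issues.append(f"row {i}: SEX value {sex!r} not in controlled terminology")
        if row.get("AGE") is not None and not row.get("AGEU"):
            issues.append(f"row {i}: AGE present without AGEU")
    return issues


dm = [
    {"STUDYID": "ABC-123", "DOMAIN": "DM", "USUBJID": "ABC-123-101-001", "SUBJID": "101-001",
     "SITEID": "101", "SEX": "M", "AGE": 44, "AGEU": "YEARS"},
    {"STUDYID": "ABC-123", "DOMAIN": "DM", "USUBJID": "ABC-123-101-001", "SUBJID": "101-001",
     "SITEID": "101", "SEX": "Male", "AGE": 37, "AGEU": None},
]
for issue in check_dm(dm):
    print(issue)
```

The second record trips three checks: a duplicate subject, a sex value that was never recoded, and an age without a unit. These are the kinds of errors that should be corrected before datasets are finalized.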

Step 6. Documentation

- Create a Define.xml file that documents the SDTM mapping, including explanations of how raw data was mapped to SDTM variables, metadata about the datasets, and controlled terminology used.

- This file is typically submitted to regulatory authorities along with the SDTM datasets.
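Define.xml is an XML document based on the CDISC ODM standard, and producing a valid one is beyond a short example. The sketch below only gathers some of the variable-level facts such a file records (variable name, label, data type, and maximum length) from already-mapped rows. The function names and the output layout are hypothetical. The labels are the standard SDTMIG labels for these DM variables.

```python
# Standard SDTMIG labels for the DM variables used in this example.
DM_LABELS = {
    "STUDYID": "Study Identifier",
    "DOMAIN": "Domain Abbreviation",
    "USUBJID": "Unique Subject Identifier",
    "AGE": "Age",
    "SEX": "Sex",
}


def infer_type(values):
    """Classify non-missing values as integer, float, or text (bools are not numeric here)."""
    numeric = [v for v in values if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if values and len(numeric) == len(values):
        return "integer" if all(isinstance(v, int) for v in numeric) else "float"
    return "text"


def variable_metadata(dataset, rows, labels):
    """Collect one metadata entry per variable, in the column order of the first row."""
    meta = []
    for var in (rows[0].keys() if rows else []):
        values = [r.get(var) for r in rows if r.get(var) not in (None, "")]
        meta.append({
            "dataset": dataset,
            "variable": var,
            "label": labels.get(var, ""),
            "type": infer_type(values),
            "max_length": max((len(str(v)) for v in values), default=0),
        })
    return meta


dm_rows = [
    {"STUDYID": "ABC-123", "DOMAIN": "DM", "USUBJID": "ABC-123-101-001", "AGE": 44, "SEX": "M"},
    {"STUDYID": "ABC-123", "DOMAIN": "DM", "USUBJID": "ABC-123-102-001", "AGE": None, "SEX": "F"},
]
for entry in variable_metadata("DM", dm_rows, DM_LABELS):
    print(entry)
```

Deriving lengths and types from the final data, rather than typing them by hand, helps keep the documentation consistent with the datasets actually submitted.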

Step 7. Submission to Regulatory Authorities

- After validation, the SDTM datasets (along with the Define.xml) are submitted to regulatory bodies like the FDA or EMA as part of the clinical trial data submission package.

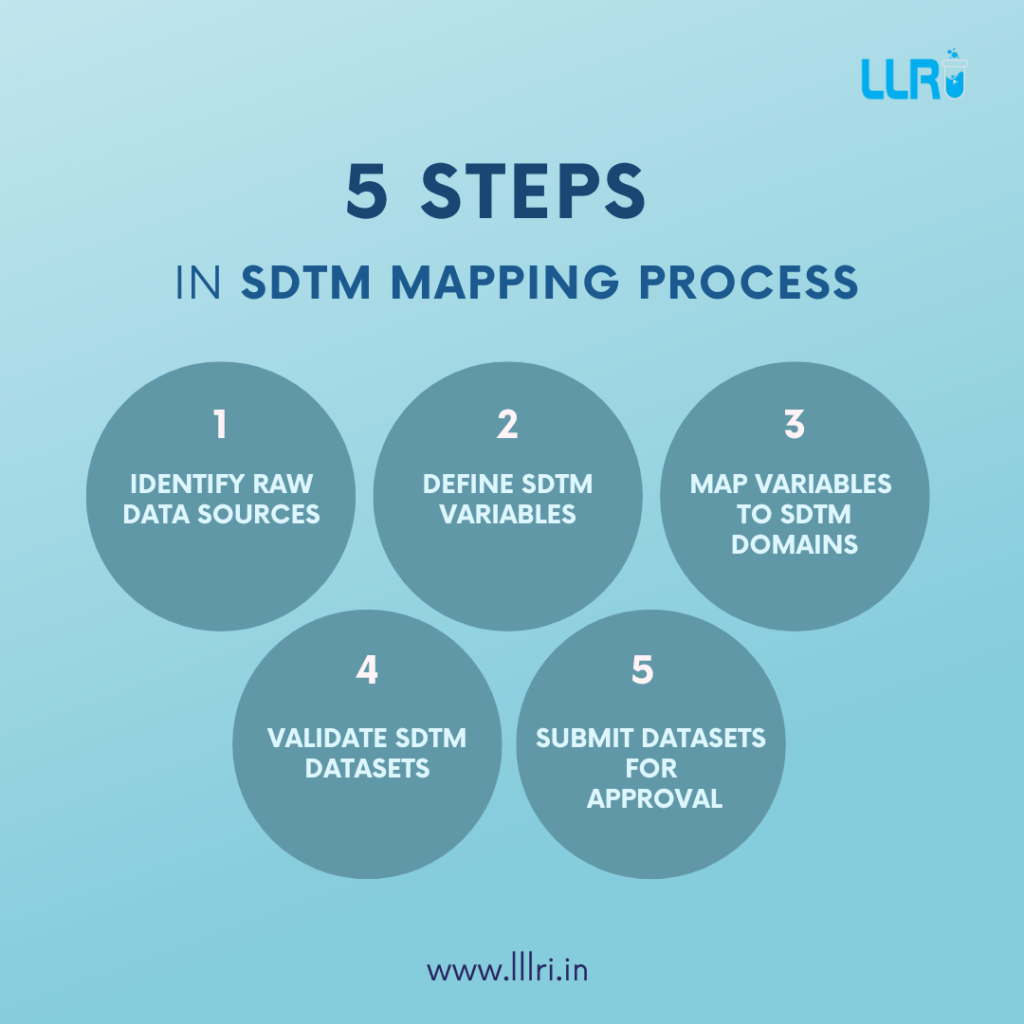

Here’s a simplified version of the SDTM mapping process:

| Step | Description |
|---|---|
| Identify Source Data | Determine the raw data sources that need to be mapped. |
| Define SDTM Domains | Identify the appropriate SDTM domains for the data. |
| Map Variables | Match raw data variables to SDTM variables. |
| Transform Data | Convert the data into the required SDTM format. |
| Validate Mapping | Check the accuracy and consistency of the mapping process. |
| Generate SDTM Datasets | Produce the final SDTM datasets for submission. |

The SDTM mapping process streamlines data handling by aligning the data with regulatory standards so that it is ready for submission to health authorities.

What About SDTM Variables?

SDTM variables are the building blocks of SDTM datasets. Each variable represents a specific piece of information in the clinical trial data, such as subject ID, age, gender, or laboratory test results. These variables are standardized across all studies, making it easier to analyze and compare data.

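Key SDTM variables include the following (a "--" prefix stands for the two-letter domain code, such as LB or AE):

- Unique Subject Identifier (USUBJID): Identifies each participant uniquely across all studies in a submission. The related Subject Identifier for the Study (SUBJID) is unique only within one study.

- Visit Number (VISITNUM): Identifies the visit during which data was collected.

- Test Short Name (--TESTCD): Standardized short code for a test, used in Findings domains such as Laboratory Test Results (for example, LBTESTCD = "HGB" for hemoglobin).

- Standardized Result (--STRESC): Result in a standard format, stored as character; numeric results are also stored in --STRESN.

- Study Day (--DY): Day of an observation relative to the subject's reference start date (RFSTDTC in DM). The reference start date is Day 1, and there is no Day 0.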
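The Study Day rule is simple arithmetic, but the missing Day 0 is easy to get wrong. A minimal sketch follows, assuming complete ISO 8601 dates. Partial dates such as "2024-03" return None here because the day cannot be computed. The reference date used below is a made-up example.

```python
from datetime import date


def study_day(event: date, ref_start: date) -> int:
    """SDTM --DY: the reference start date is Day 1, the day before it is Day -1 (no Day 0)."""
    delta = (event - ref_start).days
    return delta + 1 if delta >= 0 else delta


def study_day_from_iso(dtc: str, rfstdtc: str):
    """Compute --DY from ISO 8601 strings; return None if either date lacks a full day."""
    if len(dtc) < 10 or len(rfstdtc) < 10:
        return None
    # Keep only the YYYY-MM-DD part; a time component does not affect the study day.
    return study_day(date.fromisoformat(dtc[:10]), date.fromisoformat(rfstdtc[:10]))


ref = "2024-03-01"  # hypothetical RFSTDTC for one subject
print(study_day_from_iso("2024-03-01", ref))        # 1
print(study_day_from_iso("2024-03-15T08:30", ref))  # 15
print(study_day_from_iso("2024-02-28", ref))        # -2 (2024 is a leap year)
print(study_day_from_iso("2024-03", ref))           # None
```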

What Are The Steps In SDTM Programming?

The steps in SDTM programming involve several key phases that ensure the successful transformation of raw data into SDTM-compliant datasets. Knowing these steps is central to grasping how SDTM programming works.

- Data Collection

The first step is collecting raw data from various clinical research sources, including patient data, lab results, and adverse events. This data typically originates from Electronic Data Capture (EDC) systems used in clinical trials.

- SDTM Mapping

This step aligns the raw data with the appropriate SDTM domains and SDTM variables through the SDTM mapping process. Clinical research professionals use specific mapping tools and software to ensure compliance with CDISC guidelines.

- Data Validation

After mapping, validation checks confirm that the mapped data adheres to regulatory standards. Tools like Pinnacle 21 are often used to check the mapped datasets for conformance to CDISC rules.

- Dataset Creation

Once validation issues are resolved, the final SDTM datasets are created. These datasets are standardized and contain all the required SDTM variables across the different domains.

- Submission Preparation

Finally, the SDTM datasets are prepared for submission to regulatory bodies like the FDA. This step includes packaging the datasets with their associated documentation and ensuring that all data conforms to regulatory requirements.
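Mapping and dataset creation for Findings domains usually involve reshaping. Raw lab data is often captured as one row per subject and visit with one column per test, while the SDTM LB domain expects one record per test result. The sketch below assumes a hypothetical mapping spec (raw column name, test code and name, units, and a conversion factor to standard units) and shows how LBTESTCD, LBORRES, LBSTRESC/LBSTRESN, and LBSEQ might be populated. HGB and WBC are CDISC lab test codes; the raw column names are illustrative.

```python
# Hypothetical mapping spec: raw column -> (LBTESTCD, LBTEST, original unit, standard unit, factor)
LB_SPEC = {
    "HGB_GDL": ("HGB", "Hemoglobin", "g/dL", "g/L", 10.0),
    "WBC_RAW": ("WBC", "Leukocytes", "10^9/L", "10^9/L", 1.0),
}


def to_lb(raw_rows, studyid="ABC-123"):
    """Transpose wide raw lab rows (one per subject-visit) into long LB records."""
    out = []
    seq = {}  # running LBSEQ per USUBJID
    for raw in raw_rows:
        usubjid = raw["USUBJID"]
        for column, (testcd, test, orresu, stresu, factor) in LB_SPEC.items():
            value = raw.get(column)
            orres = "" if value is None else str(value).strip()
            if orres == "":
                continue  # a real study might instead emit a record with LBSTAT = "NOT DONE"
            try:
                stresn = round(float(orres) * factor, 6)
                stresc = f"{stresn:.15g}"
            except ValueError:
                # Non-numeric results such as "<0.5" are left as-is here;
                # a real specification needs an explicit rule for them.
                stresn, stresc = None, orres
            seq[usubjid] = seq.get(usubjid, 0) + 1
            out.append({
                "STUDYID": studyid, "DOMAIN": "LB", "USUBJID": usubjid,
                "LBSEQ": seq[usubjid], "LBTESTCD": testcd, "LBTEST": test,
                "LBORRES": orres, "LBORRESU": orresu,
                "LBSTRESC": stresc, "LBSTRESN": stresn, "LBSTRESU": stresu,
                "VISITNUM": raw["VISITNUM"],
            })
    return out


raw_lab = [
    {"USUBJID": "ABC-123-101-001", "VISITNUM": 1, "HGB_GDL": "13.5", "WBC_RAW": "6.1"},
    {"USUBJID": "ABC-123-101-001", "VISITNUM": 2, "HGB_GDL": "", "WBC_RAW": "5.8"},
]
for rec in to_lb(raw_lab):
    print(rec["LBSEQ"], rec["VISITNUM"], rec["LBTESTCD"], rec["LBORRES"], rec["LBSTRESC"], rec["LBSTRESU"])
```

Keeping the original result (LBORRES) next to the standardized one (LBSTRESC/LBSTRESN) preserves traceability from the submitted value back to what was collected.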

Each of these steps is important for maintaining the quality and integrity of the data, making it suitable for regulatory submission.

On A Final Note…

Understanding how the SDTM process works is important for professionals in clinical research. Whether you’re taking a clinical research course or considering enrolling in one, mastering the SDTM mapping process and SDTM variables can strengthen your skills.

Clinical research training centers can help you learn these skills, which are crucial in the clinical research field. If you’re looking for the best institute for a PG Diploma in Clinical Research, compare the course offerings and clinical research course fees to make an informed decision.

FAQs

What is SDTM programming?

SDTM programming is the process of transforming raw clinical trial data into a standardized format (SDTM) that is used for regulatory submission and data analysis.

What is the creation process of SDTM?

The creation process of SDTM involves collecting raw data, cleaning it, mapping it to SDTM variables, validating the data, and generating SDTM-compliant datasets.

What is the basic concept of SDTM?

The basic concept of SDTM is to provide a standardized format for clinical trial data, ensuring consistency, accuracy, and regulatory compliance.

What is the SDTM format of data?

The SDTM format is a predefined structure for clinical trial data that includes standardized domains, variables, and labels to ensure uniformity and ease of analysis.